After gathering subdomains from various sources with some cool techniques, we proceed to the next step.

Part #1: A More Advanced Recon Automation #1 (Subdomains)

Port scanning

Yes I know, I know… You want to click this post away, right?

Who uses port scans with bug bounty?

Even though you might think it is not worth it, and that you should move right on to the active scanning parts, port scanning can be very rewarding.

Think for example of a web app running on a different port, e.g. 10001. Would you have noticed it? Would you have found bugs on it? For the people that have thought about it: stick around and you might catch some new stuff. For the rest: consider scanning for it. As long as it is automated, why not, right? Well…

Legal stuff

It's kind of a weird topic. You might even end up with legal problems :/ Read more about this here.

I won't go into details here, since this post is supposed to be about recon automation. All I can say is: have some mercy and don’t scan around like a loose duck.

Also check if the program specifically disallows port scanning etc.

Let’s get started

We want to quickly get the open ports and identify the services.

After that we grab the ones speaking HTTP and continue.

The best approach I could think of is to use Masscan to get the open ports, and then run Nmap to identify the services.

I am not going over the installation of those tools, since this is a more advanced tutorial/post.

Masscan

To get the open ports using Masscan, we can use:

sudo /path/to/masscan/bin/masscan $(dig +short example.com | grep -oE "\b([0-9]{1,3}\.){3}[0-9]{1,3}\b" | head -1) -p0-10001 --rate 1000 --wait 3 2> /dev/null | grep -o -P '(?<=port ).*(?=/)'

Just make sure to adjust the --rate and --wait parameters to what your system and network can handle: raise the rate if you can do more, lower it otherwise. The --wait argument is the time in seconds Masscan keeps waiting for responses after it has finished sending. In our case it is set to 3.

The output might look something like this:

If you are not sure what the command does, visit this site.

Just redirect the output into a file, which we will call ports.txt in this example.
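If the one-liner gets hard to read inside a bigger script, the two filtering steps can be pulled out into small helpers. This is only a sketch: the function names first_ipv4 and extract_ports are made up for this example, and it assumes Masscan prints its usual "Discovered open port 80/tcp on 1.2.3.4" lines on stdout.

```shell
# Print the first IPv4 address found on stdin (e.g. the output of
# `dig +short`), skipping CNAME lines such as "alias.example.net.".
first_ipv4() {
    grep -oE '^([0-9]{1,3}\.){3}[0-9]{1,3}$' | head -n 1
}

# Turn Masscan's "Discovered open port 80/tcp on 1.2.3.4" lines into a
# sorted, de-duplicated list of port numbers, one per line.
extract_ports() {
    sed -n 's/^Discovered open port \([0-9][0-9]*\)\/.*/\1/p' | sort -n -u
}

# Usage (needs root and network access, so it is left commented out):
# ip=$(dig +short example.com | first_ipv4)
# sudo /path/to/masscan/bin/masscan "$ip" -p0-10001 --rate 1000 --wait 3 2> /dev/null | extract_ports > ports.txt
```

Sorting and de-duplicating is an extra on top of the original command. It keeps ports.txt stable between runs, which makes diffing results easier.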

Nmap

Now that we have the open ports, we go ahead and get the names of the services running on those ports.

A way to do this is by just running a normal Nmap scan on the domain, and to only include the ports we found using Masscan:

nmap -p $(cat ports.txt | paste -sd "," -) $(dig +short poc-server.com | grep -oE "\b([0-9]{1,3}\.){3}[0-9]{1,3}\b" | head -1)

This outputs something like:

    Starting Nmap 6.47 ( http://nmap.org ) at 2020-01-33 07:00 CET
    Nmap scan report for poc-server.com (11.33.33.77)
    Host is up (0.15s latency).
    PORT     STATE SERVICE
    21/tcp   open  ftp
    25/tcp   open  smtp
    26/tcp   open  rsftp
    80/tcp   open  http
    143/tcp  open  imap
    443/tcp  open  https
    465/tcp  open  smtps
    587/tcp  open  submission
    993/tcp  open  imaps
    995/tcp  open  pop3s
    2077/tcp open  unknown
    2078/tcp open  unknown
    2080/tcp open  autodesk-nlm
    2082/tcp open  infowave
    2083/tcp open  radsec
    2095/tcp open  nbx-ser
    2096/tcp open  nbx-dir
    5666/tcp open  nrpe

    Nmap done: 1 IP address (1 host up) scanned in 0.67 seconds

Now we could just filter for the ports whose service name contains http, https or ssl, but this way we might miss some interesting web surfaces running under a different service name.

If you still want to do it that way, here you go 😉

nmap -p $(cat ports.txt | paste -sd "," -) $(dig +short poc-server.com | grep -oE "\b([0-9]{1,3}\.){3}[0-9]{1,3}\b" | head -1) | grep -P "\b(https?|ssl)\b" | tail -n +2 | cut -d '/' -f1
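Note that the grep in that command also matches the Nmap banner line (it contains the nmap.org URL), which is why the tail -n +2 is needed. A slightly more defensive variant is sketched below. It only looks at rows of the port table, and it assumes the normal Nmap output format shown above. The function name http_ports is made up for this example, and the match is a bit looser than the original: any service name starting with http or ssl counts.

```shell
# Read normal Nmap output on stdin and print the numbers of open ports
# whose service name starts with "http" or "ssl".
# Only "PORT/tcp open SERVICE" rows are considered, so the banner line
# and the table header are ignored without needing `tail`.
http_ports() {
    awk '$1 ~ /^[0-9]+\/tcp$/ && $2 == "open" && $3 ~ /^(http|ssl)/ {
        split($1, parts, "/")
        print parts[1]
    }'
}

# Usage (needs network access, so it is left commented out):
# nmap -p "$(paste -sd, ports.txt)" 11.33.33.77 | http_ports
```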

So I created a simple Go script to check if a URL is ‘online’ or not: github.com/003random/online

We just feed URLs to this script and it echoes the online ones.

For example:

003random:~/ $ printf "https://poc-server.com\nhttps://example.com\nhttps://notexisting003.com\nhttp://google.com" | online

https://poc-server.com

https://example.com

http://google.com

So now instead of relying on the service name, we go ahead and try to GET every combination.

For 2 ports, that means we send 4 requests:

- http://$domain:$port1

- https://$domain:$port1

- http://$domain:$port2

- https://$domain:$port2

With this we can get the protocol as well.

If neither HTTP nor HTTPS is ‘online’, you can check the port for CVEs etc. and move on.
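Generating those combinations from a port list can be sketched as a small helper. The function name candidate_urls is an assumption made for this example. It expects one port per line on stdin, as in the ports.txt file from the Masscan step.

```shell
# candidate_urls DOMAIN
# Print an http:// and an https:// URL for every port listed on stdin.
# Blank lines in the port list are skipped.
candidate_urls() {
    domain=$1
    while IFS= read -r port || [ -n "$port" ]; do
        [ -n "$port" ] || continue
        printf 'http://%s:%s\nhttps://%s:%s\n' "$domain" "$port" "$domain" "$port"
    done
}

# Usage (assumes the `online` tool is installed, so it is left commented out):
# candidate_urls poc-server.com < ports.txt | online
```

Piping everything into online in one go is quick, but it does not tell you which scheme to prefer when both answer on the same port. That decision still has to be made per port.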

If HTTP and HTTPS are both ‘online’ (a port rarely, if ever, accepts both), then we check the content length.

This script basically takes a ports.txt file and creates URLs with protocols and ports, like we described above:

    domain="poc-server.com"

    # Loop over every non-empty line (one port per line) in ports.txt
    for port in $(sed '/^$/d' "ports.txt"); do
        url="$domain:$port"
        protocol=""

        # `online` echoes the URL back when it is reachable
        if [[ $(echo "http://$url" | online) ]]; then http=true; else http=false; fi
        if [[ $(echo "https://$url" | online) ]]; then https=true; else https=false; fi

        if [[ "$http" = true ]]; then protocol="http"; fi
        if [[ "$https" = true ]]; then protocol="https"; fi

        if [[ "$http" = true && "$https" = true ]]; then
            # If the content length of http is greater than the content length of https, then we choose http, otherwise we go with https
            contentLengthHTTP=$(curl -s "http://$url" | wc -c)
            contentLengthHTTPS=$(curl -s "https://$url" | wc -c)
            if [[ "$contentLengthHTTP" -gt "$contentLengthHTTPS" ]]; then protocol="http"; else protocol="https"; fi

            # The well-known ports always keep their default protocol
            if [[ "$port" == "80" ]]; then protocol="http"; fi
            if [[ "$port" == "443" ]]; then protocol="https"; fi
        fi

        # Only print a URL when at least one protocol answered
        if [[ -n "$protocol" ]]; then
            echo "$protocol://$domain:$port"
        fi
    done
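The decision part of that loop can also be separated from the network calls, which makes it easy to check on its own. The sketch below is one possible way to do that, not a replacement for the script above. The function name pick_protocol and its argument order are made up for this example, and the content lengths are assumed to be measured by the caller (for example with curl and wc -c).

```shell
# pick_protocol PORT HTTP_ONLINE HTTPS_ONLINE [HTTP_LENGTH HTTPS_LENGTH]
# HTTP_ONLINE and HTTPS_ONLINE are the strings "true" or "false".
# Prints "http", "https", or nothing when neither scheme answered.
pick_protocol() {
    port=$1 http=$2 https=$3 len_http=${4:-0} len_https=${5:-0}
    if [ "$http" = true ] && [ "$https" = true ]; then
        # Both answered: the well-known ports keep their default scheme,
        # otherwise the scheme with the larger response wins.
        if [ "$port" = 80 ]; then echo http
        elif [ "$port" = 443 ]; then echo https
        elif [ "$len_http" -gt "$len_https" ]; then echo http
        else echo https
        fi
    elif [ "$https" = true ]; then echo https
    elif [ "$http" = true ]; then echo http
    fi
}

# Usage:
# pick_protocol 10001 true true 5120 300    # prints "http"
```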

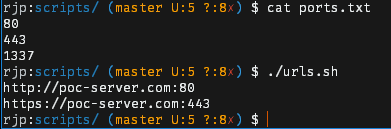

Possible output:

Combine

At this moment, we have a command to get the open ports, obtained with Masscan. These open ports are saved into a text file and can be used to get the services via Nmap, or to pass the domain with its ports to online.go. (You can also do both: store the services in a services.txt, so you can grep for services later when you find exploits for them.)

After we have determined the protocol, we can echo the URLs into a file called urls.txt for example.

Now all that is left is to put this all together in either your main file from last time, or in a new file which automatically creates the ports.txt file and the urls.txt etc.

Then you can just run that script for each subdomain you got from part #1 of this series, and you have gotten yourself a nice extended scope, with web services others might not have found.
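Running it per subdomain can be as simple as a small loop. The helper below is a sketch: run_per_subdomain is a made-up name, and both portscan.sh and subdomains.txt in the usage line are placeholders for whatever your own script and the output of part #1 are called.

```shell
# run_per_subdomain COMMAND [ARGS...]
# Read subdomains on stdin (one per line, blank lines skipped) and run
# COMMAND once per subdomain, with the subdomain as the last argument.
run_per_subdomain() {
    while IFS= read -r sub || [ -n "$sub" ]; do
        [ -n "$sub" ] || continue
        # Detach stdin so COMMAND cannot swallow the rest of the list.
        "$@" "$sub" < /dev/null
    done
}

# Usage (placeholders, left commented out):
# run_per_subdomain ./portscan.sh < subdomains.txt >> urls.txt
```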

Tips

- You can check for a difference in content length between port 80 and port 443. This way you don’t scan the same attack surface twice if both serve the same content. (Compare with a small margin, and make sure the page is not blank.)

- You can check if port 80 redirects to port 443, i.e. whether HTTP redirects to HTTPS.

- Be creative and add stuff yourself 😉
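The first two tips could be sketched as follows. Both helper names are made up for this example, and the default margin of 50 bytes is an arbitrary assumption that you will probably want to tune.

```shell
# similar_length A B [MARGIN]
# Succeed when both byte counts are non-zero (the page is not blank)
# and differ by at most MARGIN bytes (default 50, chosen arbitrarily).
similar_length() {
    a=$1 b=$2 margin=${3:-50}
    [ "$a" -gt 0 ] && [ "$b" -gt 0 ] || return 1
    diff=$((a - b))
    [ "$diff" -ge 0 ] || diff=$((0 - diff))
    [ "$diff" -le "$margin" ]
}

# Read HTTP response headers on stdin (e.g. from `curl -sI http://host`)
# and succeed when a Location header points at an https:// URL.
redirects_to_https() {
    tr -d '\r' | grep -qiE '^location:[[:space:]]*https://'
}

# Usage (needs network access, so it is left commented out):
# curl -sI "http://poc-server.com" | redirects_to_https && echo "80 redirects to HTTPS"
# similar_length "$(curl -s http://poc-server.com | wc -c)" "$(curl -s https://poc-server.com | wc -c)" && echo "same surface"
```

The redirect check only looks at the scheme of the Location header, not at the target host, so a redirect to a different HTTPS site also counts.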